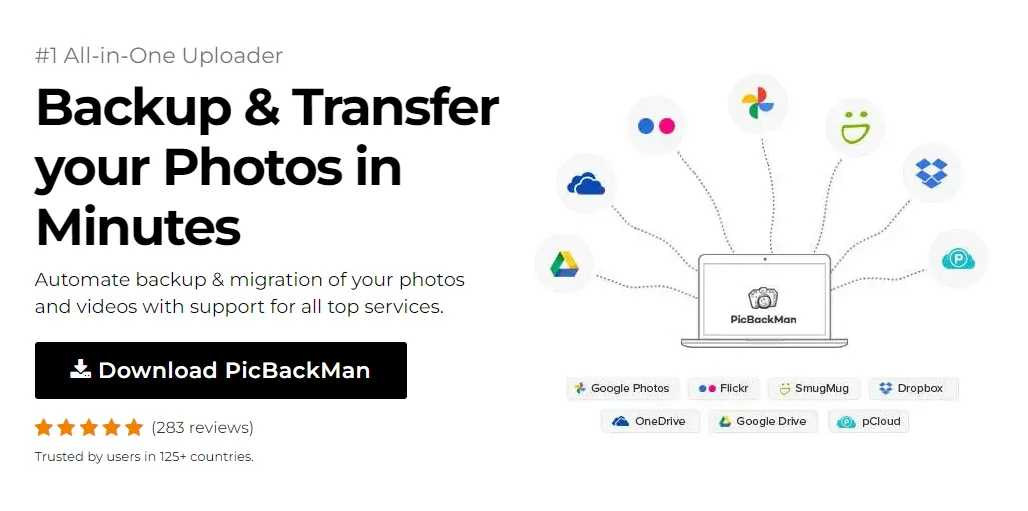


How to Move Photos and Videos from Amazon S3 to Google Drive?

Need to transfer your media files from Amazon S3 to Google Drive? You're not alone. Many people find themselves wanting to move their photos and videos between these popular cloud storage platforms. Whether you're switching services, consolidating your files, or just creating backups, this guide will walk you through the entire process step by step.

I've personally helped numerous clients migrate their media libraries between cloud services, and I'm going to share the most reliable methods that work in 2023. By the end of this article, you'll have all the knowledge needed to successfully move your photos and videos from Amazon S3 to Google Drive without losing quality or metadata.

Why Transfer Files from Amazon S3 to Google Drive?

Before diving into the how-to, let's quickly look at why you might want to make this move:

- Cost considerations - Google Drive may offer better pricing for your specific storage needs

- Collaboration features - Google Drive excels at sharing and collaboration

- Integration with other services - You might use other Google services that work seamlessly with Drive

- User interface preferences - Some find Google Drive more intuitive for managing media files

- Backup strategy - Creating redundancy across multiple cloud platforms

Whatever your reason, the methods below will help you complete the transfer efficiently.

Methods to Transfer Photos and Videos from S3 to Google Drive

There are several approaches to moving your media from Amazon S3 to Google Drive. I'll cover the four most practical methods:

- Manual download and upload

- Using third-party transfer services

- Command-line tools (AWS CLI and Google Drive API)

- Custom scripts for automated transfers

Let's explore each method in detail, starting with the simplest approach.

Method 1: Manual Download and Upload

This straightforward method involves downloading files from Amazon S3 to your local computer, then uploading them to Google Drive. While simple, it works best for smaller file collections.

Step-by-Step Process:

- Log in to your AWS Management Console

- Navigate to the S3 service

- Locate your bucket containing photos and videos

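- Select the file you want to transfer

- Click the "Download" button to save it to your computer (the S3 console downloads one object at a time; for many files, the AWS CLI command in Method 3 is much faster)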

- Open Google Drive in your browser

- Click "New" and select "File upload" or "Folder upload"

- Select the downloaded files from your computer

- Wait for the upload to complete

Pros and Cons of Manual Transfer

| Pros | Cons |
|---|---|
| No technical knowledge required | Time-consuming for large collections |
| Complete control over which files transfer | Requires sufficient local storage space |
| Can verify files during the process | Internet connection issues can disrupt transfers |
| No additional tools needed | Not suitable for automated or scheduled transfers |

Method 2: Using Third-Party Transfer Services

Third-party services can streamline the transfer process by connecting directly to both your Amazon S3 and Google Drive accounts, eliminating the need for local downloads and uploads.

Popular Transfer Services:

- MultCloud

- CloudHQ

- Mover.io (now part of Microsoft)

- Cloudsfer

- Otixo

How to Use MultCloud for S3 to Google Drive Transfer:

- Create an account on MultCloud.com

- Click "Add Cloud" and select Amazon S3

- Enter your AWS access key ID and secret access key to connect your S3 account (ideally from an IAM user that only has read access to the bucket)

- Add Google Drive using the same "Add Cloud" option

- Go to "Cloud Transfer" in the MultCloud dashboard

- Select Amazon S3 as the source and navigate to your bucket

- Choose Google Drive as the destination and select a folder

- Configure transfer options (schedule, filters, etc.)

- Click "Transfer Now" to begin the process

Using CloudHQ:

- Sign up for a CloudHQ account

- Select "Sync Pair" from the dashboard

- Choose Amazon S3 as your source

- Authenticate and connect to your S3 account

- Select Google Drive as your destination

- Connect to your Google Drive account

- Configure sync options and filters

- Start the sync process

Pros and Cons of Third-Party Services

| Pros | Cons |
|---|---|
| No local storage required | May involve subscription costs |
| Faster transfers (cloud-to-cloud) | Privacy concerns with third-party access |
| Scheduling and automation options | Potential service limitations on free plans |
| User-friendly interfaces | Dependency on the third-party service's reliability |

Method 3: Command-Line Tools (AWS CLI and Google Drive API)

For those comfortable with command-line interfaces, using AWS CLI and Google Drive API offers powerful and flexible transfer options.

Prerequisites:

- AWS CLI installed and configured

- Python 3 installed

- Google API client library for Python

- Google Drive API credentials

Step-by-Step Process:

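- Install AWS CLI if not already installed (pip installs AWS CLI version 1; AWS now recommends its bundled version 2 installer, and the commands below work the same in both):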

pip install awscli

- Configure AWS CLI with your credentials:

aws configure

- Install the Google API client and OAuth helper libraries:

pip install google-api-python-client google-auth-httplib2 google-auth-oauthlib

- Set up Google Drive API credentials:

  - Go to Google Cloud Console

  - Create a new project

  - Enable the Google Drive API

  - Configure the OAuth consent screen (add your own Google account as a test user)

  - Create credentials (OAuth client ID, application type "Desktop app")

  - Download the credentials JSON file and save it as `credentials.json` in the same folder as your script

- Download files from S3 to a temporary directory:

aws s3 cp s3://your-bucket-name/path/to/files/ ./temp-folder/ --recursive

- Use a Python script to upload to Google Drive (example below)

Example Python Script for Uploading to Google Drive:

```python
import mimetypes
import os

from google.auth.transport.requests import Request
from google.oauth2.credentials import Credentials
from google_auth_oauthlib.flow import InstalledAppFlow
from googleapiclient.discovery import build
from googleapiclient.http import MediaFileUpload

# OAuth client file downloaded from Google Cloud Console
CLIENT_SECRETS_FILE = 'credentials.json'
# Where the signed-in user's token is cached after the first run
TOKEN_FILE = 'token.json'
SCOPES = ['https://www.googleapis.com/auth/drive']


def get_drive_service():
    """Authenticate (opening a browser on first run) and build the Drive v3 service."""
    creds = None
    if os.path.exists(TOKEN_FILE):
        creds = Credentials.from_authorized_user_file(TOKEN_FILE, SCOPES)
    if not creds or not creds.valid:
        if creds and creds.expired and creds.refresh_token:
            creds.refresh(Request())
        else:
            # Local-server flow; Google no longer supports the old copy/paste "oob" redirect
            flow = InstalledAppFlow.from_client_secrets_file(CLIENT_SECRETS_FILE, SCOPES)
            creds = flow.run_local_server(port=0)
        with open(TOKEN_FILE, 'w') as token:
            token.write(creds.to_json())
    return build('drive', 'v3', credentials=creds)


drive_service = get_drive_service()


def upload_file(filename, filepath, parent_folder_id=None):
    """Upload one local file to Google Drive and return its file ID."""
    file_metadata = {'name': filename}
    if parent_folder_id:
        file_metadata['parents'] = [parent_folder_id]
    # Guess the MIME type from the extension (.png -> image/png, .mov -> video/quicktime, ...)
    mime_type = mimetypes.guess_type(filename)[0] or 'application/octet-stream'
    media = MediaFileUpload(filepath, mimetype=mime_type, resumable=True)
    file = drive_service.files().create(
        body=file_metadata,
        media_body=media,
        fields='id'
    ).execute()
    print(f"File ID: {file.get('id')} - {filename} uploaded successfully")
    return file.get('id')


# Example usage
# First, create a folder in Google Drive
folder_metadata = {
    'name': 'S3 Media Files',
    'mimeType': 'application/vnd.google-apps.folder'
}
folder = drive_service.files().create(body=folder_metadata, fields='id').execute()
folder_id = folder.get('id')
print(f"Created folder with ID: {folder_id}")

# Upload every file under the temp folder, including subfolders created by
# "aws s3 cp --recursive" (all files land in the one Drive folder)
temp_folder = './temp-folder/'
for dirpath, _dirnames, filenames in os.walk(temp_folder):
    for filename in filenames:
        upload_file(filename, os.path.join(dirpath, filename), folder_id)
```

Pros and Cons of Command-Line Method

| Pros | Cons |
|---|---|
| Highly customizable | Requires technical knowledge |
| Can be automated with scripts | Setup process can be complex |
| No recurring costs | Requires maintenance of scripts and credentials |
| Complete control over the transfer process | Debugging issues can be challenging |

Method 4: Custom Scripts for Automated Transfers

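For ongoing transfers or large collections, a custom script that pulls each file from S3 and pushes it straight to Google Drive can be the most efficient solution. The data still passes through the machine running the script (each file is held briefly in a temporary file), but there is no manual download step. The script below needs the boto3, google-api-python-client, and google-auth-oauthlib packages, AWS credentials set up with `aws configure`, and an OAuth client file saved as `credentials.json` (see the Google Drive API credential steps in Method 3).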

Advanced Python Script for Direct S3 to Google Drive Transfer:

```python
import os
import pickle
import tempfile

import boto3
from google.auth.transport.requests import Request
from google_auth_oauthlib.flow import InstalledAppFlow
from googleapiclient.discovery import build
from googleapiclient.http import MediaFileUpload

# S3 configuration
S3_BUCKET_NAME = 'your-bucket-name'
S3_PREFIX = 'path/to/files/'  # Optional prefix to filter files ('' for the whole bucket)

# Google Drive configuration
SCOPES = ['https://www.googleapis.com/auth/drive']
TOKEN_PICKLE = 'token.pickle'
CREDENTIALS_FILE = 'credentials.json'
GOOGLE_DRIVE_FOLDER_ID = 'your-folder-id'  # Optional: ID of destination folder (None for My Drive)


def get_google_drive_service():
    creds = None
    # Check if token.pickle exists with saved credentials
    if os.path.exists(TOKEN_PICKLE):
        with open(TOKEN_PICKLE, 'rb') as token:
            creds = pickle.load(token)
    # If credentials don't exist or are invalid, get new ones
    if not creds or not creds.valid:
        if creds and creds.expired and creds.refresh_token:
            creds.refresh(Request())
        else:
            flow = InstalledAppFlow.from_client_secrets_file(CREDENTIALS_FILE, SCOPES)
            creds = flow.run_local_server(port=0)
        # Save credentials for future use
        with open(TOKEN_PICKLE, 'wb') as token:
            pickle.dump(creds, token)
    # Build and return the Drive service
    return build('drive', 'v3', credentials=creds)


def guess_mime_type(filename):
    """Guess MIME type based on file extension."""
    extension = os.path.splitext(filename)[1].lower()
    if extension in ['.jpg', '.jpeg', '.jpe']:
        return 'image/jpeg'
    elif extension == '.png':
        return 'image/png'
    elif extension == '.gif':
        return 'image/gif'
    elif extension in ['.mp4', '.m4v']:
        return 'video/mp4'
    elif extension in ['.mpg', '.mpeg']:
        return 'video/mpeg'
    elif extension in ['.mov', '.qt']:
        return 'video/quicktime'
    elif extension == '.avi':
        return 'video/x-msvideo'
    else:
        return 'application/octet-stream'


def transfer_s3_to_drive():
    # Initialize S3 client and Google Drive service
    s3 = boto3.client('s3')
    drive_service = get_google_drive_service()

    # list_objects_v2 returns at most 1,000 keys per call, so page through all results
    paginator = s3.get_paginator('list_objects_v2')
    found_any = False
    for page in paginator.paginate(Bucket=S3_BUCKET_NAME, Prefix=S3_PREFIX or ''):
        for item in page.get('Contents', []):
            found_any = True
            key = item['Key']
            filename = os.path.basename(key)
            # Skip "folder" placeholder keys such as 'photos/' (their basename is empty)
            if not filename:
                continue
            print(f"Processing {key}...")
            # Reserve a temporary file path to download into
            with tempfile.NamedTemporaryFile(delete=False) as temp_file:
                temp_path = temp_file.name
            try:
                # Download file from S3 to temporary location
                s3.download_file(S3_BUCKET_NAME, key, temp_path)
                # Prepare file metadata for Google Drive
                file_metadata = {'name': filename}
                if GOOGLE_DRIVE_FOLDER_ID:
                    file_metadata['parents'] = [GOOGLE_DRIVE_FOLDER_ID]
                # Upload file to Google Drive
                media = MediaFileUpload(temp_path, mimetype=guess_mime_type(filename), resumable=True)
                file = drive_service.files().create(
                    body=file_metadata,
                    media_body=media,
                    fields='id,name'
                ).execute()
                print(f"Uploaded {filename} to Google Drive with ID: {file.get('id')}")
            except Exception as e:
                print(f"Error processing {key}: {e}")
            finally:
                # Clean up temporary file
                if os.path.exists(temp_path):
                    os.unlink(temp_path)
    if not found_any:
        print(f"No objects found in {S3_BUCKET_NAME}/{S3_PREFIX}")


if __name__ == "__main__":
    transfer_s3_to_drive()
```

Setting Up Automated Transfers with Cron Jobs

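To run your transfer script automatically on a schedule (note that the script as written uploads every matching object on each run, so repeated runs create duplicate copies in Google Drive unless you add a check for files that are already there):

- Save your script as `s3_to_drive_transfer.py`

- Run it once by hand (`python s3_to_drive_transfer.py`) to complete the Google sign-in in your browser; this creates `token.pickle`, which later unattended runs reuse

- Optionally, make the script executable (only needed if you run it directly, with a `#!/usr/bin/env python3` first line):

chmod +x s3_to_drive_transfer.py

- Edit your crontab:

crontab -e

- Add a schedule (e.g., daily at 2 AM). Cron starts jobs in your home directory, so change into the script's folder first so it can find `credentials.json` and `token.pickle`:

0 2 * * * cd /path/to/script-folder && /path/to/python s3_to_drive_transfer.py >> transfer_log.txt 2>&1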
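To keep scheduled runs from uploading the same file twice, the transfer loop can ask Drive whether a file with that name already exists in the destination folder before downloading it from S3. The sketch below assumes that matching on file name within one folder is good enough (two different photos with the same name would be treated as duplicates). `drive_name_query` and `already_in_drive` are hypothetical helper names; `files().list(q=..., fields=..., pageSize=...)` is the Drive v3 list call, and the query follows Drive's search syntax, where single quotes and backslashes inside a value are escaped with a backslash.

```python
def drive_name_query(filename, parent_id):
    """Build a Drive v3 search query for a non-trashed file with this name in one folder."""
    # Drive query strings escape backslashes and single quotes with a backslash
    escaped = filename.replace('\\', '\\\\').replace("'", "\\'")
    return f"name = '{escaped}' and '{parent_id}' in parents and trashed = false"


def already_in_drive(drive_service, filename, parent_id):
    """Return True if the folder already holds a file with this name."""
    result = drive_service.files().list(
        q=drive_name_query(filename, parent_id),
        fields='files(id)',
        pageSize=1,
    ).execute()
    return bool(result.get('files'))
```

In the custom script you would call `already_in_drive(drive_service, filename, GOOGLE_DRIVE_FOLDER_ID or 'root')` right after computing `filename` and `continue` when it returns True; `'root'` is Drive's alias for the top of My Drive.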

Pros and Cons of Custom Scripts

| Pros | Cons |
|---|---|
| Maximum flexibility and customization | Requires programming knowledge |
| Can handle complex transfer logic | Time-consuming to develop and test |
| Fully automated operation | Maintenance responsibility falls on you |
| No third-party service dependencies | Potential API changes may break functionality |

Handling Large Media Collections

When transferring large collections of photos and videos, you'll need to consider several factors to ensure successful transfers:

Breaking Down Large Transfers

Instead of trying to move everything at once, consider these approaches:

- Transfer files in batches (e.g., 1,000 files at a time)

- Organize transfers by folder or date

- Prioritize important files first

- Use parallel transfers when possible
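Batching and parallelism combine naturally: split the list of S3 keys into fixed-size batches and run each batch through a small thread pool. This is a minimal sketch; `batched`, `run_in_batches`, and the `transfer_one(key)` callable are hypothetical names, and `transfer_one` stands in for the download-then-upload body of the custom script. The google-api-python-client documentation notes that its httplib2-based service objects are not thread-safe, so in real use each worker should build its own Drive service.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from itertools import islice


def batched(items, size):
    """Yield successive lists of at most `size` items (e.g. 1,000 S3 keys per batch)."""
    it = iter(items)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def run_in_batches(keys, transfer_one, batch_size=1000, workers=4):
    """Call transfer_one(key) for every key, one batch at a time, using a thread pool.

    Returns a dict {key: exception} for the transfers that failed, so they can be retried.
    """
    failures = {}
    for batch in batched(keys, batch_size):
        with ThreadPoolExecutor(max_workers=workers) as pool:
            futures = {pool.submit(transfer_one, key): key for key in batch}
            for future in as_completed(futures):
                key = futures[future]
                try:
                    future.result()
                except Exception as exc:  # keep going; report failures at the end
                    failures[key] = exc
    return failures
```

Finishing one batch before starting the next keeps memory and temporary-disk use bounded and gives you natural checkpoints for logging progress.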

Dealing with Bandwidth Limitations

To manage bandwidth effectively:

- Schedule transfers during off-peak hours

- Use cloud-to-cloud transfers to avoid local bandwidth bottlenecks

- Consider rate-limiting your transfers to prevent network saturation

- Use a wired connection rather than Wi-Fi for local transfers
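For the AWS CLI, the `s3.max_bandwidth` setting (for example `aws configure set default.s3.max_bandwidth 50MB/s`) caps S3 transfer speed. For the Drive upload side of a custom script, a simple throttle can do the same job. The sketch below is an assumption-laden illustration: `Throttle` is a hypothetical class, and it expects you to upload with a resumable `MediaFileUpload(..., chunksize=..., resumable=True)` and loop on `request.next_chunk()`, calling `consume()` with the chunk size after each chunk.

```python
import time


class Throttle:
    """Keep the average transfer rate at or below max_bytes_per_sec.

    Call consume(n) after sending each chunk of n bytes; it sleeps just long
    enough that total bytes / elapsed time stays within the limit.
    """

    def __init__(self, max_bytes_per_sec, clock=time.monotonic, sleep=time.sleep):
        self.max_bytes_per_sec = max_bytes_per_sec
        self.clock = clock
        self.sleep = sleep
        self.start = clock()
        self.sent = 0

    def consume(self, nbytes):
        """Record nbytes as sent, sleep if we are ahead of schedule, return the delay used."""
        self.sent += nbytes
        # Earliest moment at which `sent` bytes are allowed to have gone out
        allowed_at = self.start + self.sent / self.max_bytes_per_sec
        delay = allowed_at - self.clock()
        if delay > 0:
            self.sleep(delay)
        return max(delay, 0.0)
```

Because the limit applies to the running average, a slow stretch (for example, while the next file downloads from S3) lets the following chunks go out without waiting.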

Verifying Transfer Integrity

Always verify that your files transferred correctly:

- Compare file counts between source and destination

- Check file sizes match

- For critical files, verify checksums (MD5 or SHA-256)

- Spot-check random files by opening them
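Checksums can be compared without downloading anything twice, with one caveat. Google Drive reports an `md5Checksum` for uploaded (non-Google-Docs) files, which you can request with `files().get(fileId=..., fields='md5Checksum')`. S3's ETag (from `head_object`) equals the object's MD5 only when the object was uploaded in a single part and not encrypted with SSE-KMS or SSE-C; multipart ETags end in `-N`, and the AWS CLI switches to multipart uploads for files of 8 MB or more by default. For those objects, hash the local copy you downloaded instead. The helper names below are hypothetical.

```python
import hashlib


def md5_of_file(path, chunk_size=8 * 1024 * 1024):
    """Return the hex MD5 digest of a local file, read in chunks to save memory."""
    digest = hashlib.md5()
    with open(path, 'rb') as f:
        for chunk in iter(lambda: f.read(chunk_size), b''):
            digest.update(chunk)
    return digest.hexdigest()


def s3_etag_is_md5(etag):
    """True if an S3 ETag looks like a plain MD5 (no multipart '-N' suffix).

    ETags of SSE-KMS or SSE-C objects are not MD5s either; this check cannot detect that.
    """
    return '-' not in etag.strip('"')


def checksums_match(s3_etag, drive_md5):
    """Compare an S3 ETag with Drive's md5Checksum.

    Returns None when the ETag is not a plain MD5, meaning the comparison is not meaningful.
    """
    if not s3_etag_is_md5(s3_etag):
        return None
    return s3_etag.strip('"').lower() == drive_md5.lower()
```

A `None` result is the cue to fall back to `md5_of_file()` on the downloaded copy and compare that with Drive's value.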

Maintaining File Organization

Preserving your organizational structure during transfers is crucial for finding files later.

Preserving Folder Structures

Most methods allow you to maintain your folder hierarchy:

- When using AWS CLI, include the `--recursive` flag

- With third-party services, enable the option to preserve folder structure

- In custom scripts, recreate the folder structure in Google Drive
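Recreating folders in a custom script mostly comes down to splitting each S3 key into its folder path and file name, then creating each Drive folder once and reusing its ID. This sketch assumes keys use `/` as the separator; `split_s3_key`, `DriveFolderCache`, and the `create_folder(name, parent_id)` callable are hypothetical. With the Drive v3 API, `create_folder` would call `files().create()` with the `application/vnd.google-apps.folder` MIME type and `'parents': [parent_id]`, as in the Method 3 script. Because the cache starts empty on each run, it does not detect folders created by earlier runs, and Drive allows several folders with the same name.

```python
import posixpath


def split_s3_key(key, prefix=''):
    """Split an S3 key into (folder parts, filename), relative to an optional prefix.

    'photos/2021/trip/img.jpg' -> (['photos', '2021', 'trip'], 'img.jpg')
    """
    if prefix and key.startswith(prefix):
        key = key[len(prefix):]
    folder, filename = posixpath.split(key)
    parts = [part for part in folder.split('/') if part]
    return parts, filename


class DriveFolderCache:
    """Map S3-style folder paths to Google Drive folder IDs, creating each folder once."""

    def __init__(self, root_id, create_folder):
        self.ids = {(): root_id}  # empty path -> destination root folder
        self.create_folder = create_folder

    def folder_id_for(self, parts):
        """Return the Drive folder ID for a list of folder names, creating missing levels."""
        path = ()
        for name in parts:
            parent_id = self.ids[path]
            path = path + (name,)
            if path not in self.ids:
                self.ids[path] = self.create_folder(name, parent_id)
        return self.ids[path]
```

In the custom script's loop, `parts, filename = split_s3_key(key, S3_PREFIX)` followed by `cache.folder_id_for(parts)` gives the parent ID to put in `file_metadata['parents']`.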

Handling Metadata and File Properties

Media files often contain important metadata:

- EXIF data in photos (date taken, camera info, location)

- Video metadata (resolution, frame rate, creation date)

- Custom tags and descriptions

To preserve this data:

- Use direct file transfers rather than converting formats

- Check if your transfer service supports metadata preservation

- For critical metadata, consider using specialized tools that specifically handle media metadata

Cost Considerations

Be aware of potential costs when transferring between cloud services:

Amazon S3 Egress Charges

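Amazon charges for data leaving its network (US East rates for transfer out to the internet; other regions differ and prices can change):

- First 100 GB per month is free (this allowance is shared across AWS services; it was 1 GB before December 2021)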

- Up to 10 TB: $0.09 per GB

- Next 40 TB: $0.085 per GB

- Next 100 TB: $0.07 per GB

For a 100 GB media collection at $0.09 per GB, you could pay around $9 in egress fees, less whatever portion your monthly free allowance covers.
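Tiered pricing is easy to miscount by hand, so here is a small calculator for the rates listed in this section. It is a sketch under stated assumptions: `s3_egress_cost` and `TIERS` are hypothetical names, 1 TB is treated as 1,024 GB, the free allowance is a parameter because it depends on your account, and transfers beyond the listed 150 TB raise an error rather than guessing a rate. Check the current Amazon S3 pricing page before relying on the numbers.

```python
# Tiered S3 data-transfer-out prices listed above (USD per GB).
# Assumption: 1 TB = 1,024 GB; rates beyond the first 150 TB are not covered.
TIERS = [
    (10 * 1024, 0.09),    # first 10 TB
    (40 * 1024, 0.085),   # next 40 TB
    (100 * 1024, 0.07),   # next 100 TB
]


def s3_egress_cost(total_gb, free_gb=0.0):
    """Estimate the S3 egress fee in USD for moving total_gb out to the internet."""
    billable = max(total_gb - free_gb, 0.0)
    cost = 0.0
    for tier_gb, price_per_gb in TIERS:
        in_tier = min(billable, tier_gb)  # portion of the remaining data billed at this rate
        cost += in_tier * price_per_gb
        billable -= in_tier
    if billable > 0:
        raise ValueError("transfer exceeds the tiers listed; check current AWS pricing")
    return cost
```

For example, `s3_egress_cost(100)` comes to about 9.0, and a 20 TB library (`s3_egress_cost(20 * 1024)`) is billed 10 TB at $0.09 plus 10 TB at $0.085, about $1,792.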

Google Drive Storage Costs

Ensure you have sufficient Google Drive storage:

- Free tier: 15 GB (shared across Google services)

- Google One 100 GB: $1.99/month

- Google One 200 GB: $2.99/month

- Google One 2 TB: $9.99/month
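Before starting, it is worth checking that the collection actually fits. A minimal sketch, assuming you already have a boto3 S3 client and a Drive v3 service: the Drive quota comes from `drive_service.about().get(fields='storageQuota').execute()['storageQuota']`, whose `limit` and `usage` values are strings in bytes, and `limit` is missing for accounts with unlimited storage. Note that `usage` counts everything sharing the quota (Gmail and Photos included). `drive_has_room` and `s3_prefix_size` are hypothetical helper names.

```python
def drive_has_room(storage_quota, bytes_needed):
    """Return True if a Drive storageQuota dict can absorb bytes_needed more bytes."""
    if 'limit' not in storage_quota:
        return True  # no limit reported: unlimited storage
    free_bytes = int(storage_quota['limit']) - int(storage_quota['usage'])
    return bytes_needed <= free_bytes


def s3_prefix_size(s3_client, bucket, prefix=''):
    """Total size in bytes of all objects under an S3 prefix, paging past 1,000 keys."""
    total = 0
    paginator = s3_client.get_paginator('list_objects_v2')
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        total += sum(obj['Size'] for obj in page.get('Contents', []))
    return total
```

Putting them together, `drive_has_room(quota, s3_prefix_size(s3, 'your-bucket-name', 'path/to/files/'))` tells you whether to upgrade your Google One plan first.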

Third-Party Service Fees

Many cloud transfer services have tiered pricing:

- Free plans: Limited transfers (often 5-10 GB)

- Basic plans: $5-10/month

- Business plans: $15-30/month

Troubleshooting Common Issues

Even with careful planning, you may encounter problems during transfers.

Failed Transfers

If your transfer fails:

- Check your internet connection

- Verify your credentials for both services are valid

- Look for error messages in logs

- Try transferring a small batch to isolate the issue

- Ensure you have sufficient permissions on both services
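Many failures are temporary (a dropped connection, a rate-limit response), so a custom script benefits from retrying before giving up. Both libraries have built-in options: googleapiclient requests accept `execute(num_retries=...)`, and boto3 clients accept a botocore `Config(retries={...})`. For wrapping a whole download-and-upload step, here is a generic sketch; `with_retries` is a hypothetical helper, and retrying on every exception is a simplification, since in practice you would retry only transient errors such as HTTP 429 or 5xx responses.

```python
import random
import time


def with_retries(func, attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call func(); after a failure, wait base_delay * 2**n seconds (plus jitter) and retry.

    Re-raises the last exception once `attempts` calls have failed.
    """
    for attempt in range(attempts):
        try:
            return func()
        except Exception:
            if attempt == attempts - 1:
                raise
            # Exponential backoff with random jitter so parallel workers don't retry in lockstep
            sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
```

A call such as `with_retries(lambda: s3.download_file(S3_BUCKET_NAME, key, temp_path))` waits roughly 1, 2, 4 and 8 seconds between attempts with the defaults.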

Missing Files After Transfer

If files are missing after transfer: