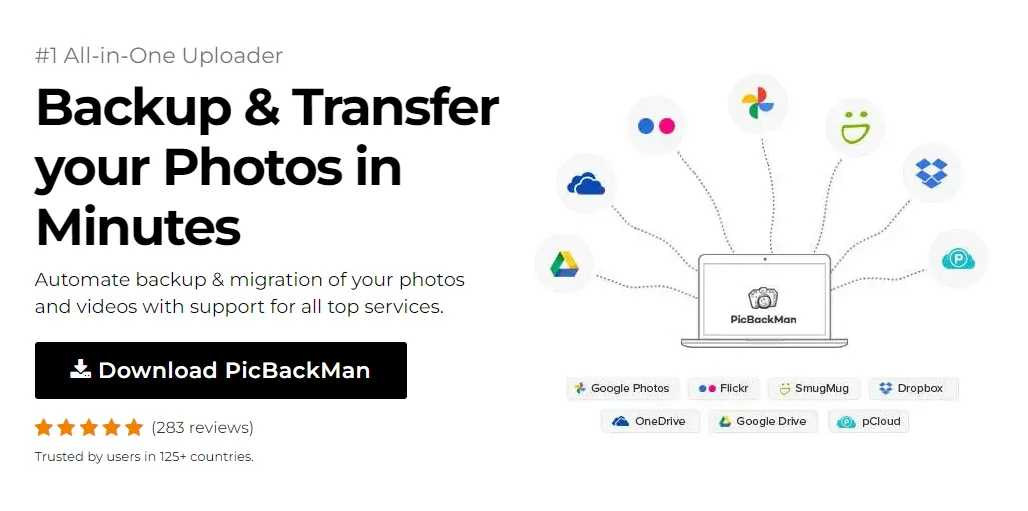

Why is it the #1 bulk uploader?

- Insanely fast!

- Maintains folder structure.

- 100% automated upload.

- Supports RAW files.

- Privacy default.

How can you get started?

Download PicBackMan and start free, then upgrade to annual or lifetime plan as per your needs. Join 100,000+ users who trust PicBackMan for keeping their precious memories safe in multiple online accounts.

“Your pictures are scattered. PicBackMan helps you bring order to your digital memories.”

AWS S3 to S3 Data Transfer in 5 Free Ways - Steps with images

Transferring data between Amazon S3 buckets is a common task for many AWS users. Whether you're migrating data, creating backups, or reorganizing your storage structure, knowing how to move files between S3 buckets efficiently can save you time and money. In this guide, I'll walk you through five completely free methods to transfer data from one S3 bucket to another.

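What's great is that these methods rely on tools and features built into S3, so you don't need a separate paid transfer service. Standard S3 request and data transfer charges still apply, and S3 Batch Operations bills per job and per object processed. Let's dive into these practical solutions that you can implement right away!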

Why Transfer Data Between S3 Buckets?

Before we get into the how-to part, let's quickly look at some common reasons why you might need to transfer data between S3 buckets:

- Reorganizing your storage architecture

- Creating data backups in different regions

- Moving data to comply with data residency requirements

- Sharing data with other AWS accounts

- Setting up staging and production environments

- Migrating to a new AWS account

Now, let's look at the five free methods to transfer your S3 data.

Method 1: Using AWS CLI for S3 to S3 Transfer

The AWS Command Line Interface (CLI) is a powerful tool that lets you interact with AWS services directly from your terminal. It's perfect for S3 to S3 transfers, especially when you need to move multiple files or entire buckets.

Setting Up AWS CLI

If you haven't installed AWS CLI yet, follow these steps:

- Download and install AWS CLI from the official AWS website

- Configure AWS CLI with your credentials by running:

aws configure

- Enter your AWS Access Key ID, Secret Access Key, default region, and output format

Basic S3 to S3 Copy Command

To copy a single file from one bucket to another:

aws s3 cp s3://source-bucket/file.txt s3://destination-bucket/

Copying Multiple Files or Entire Buckets

To copy all contents from one bucket to another, use the sync command:

aws s3 sync s3://source-bucket/ s3://destination-bucket/

Advanced CLI Options for Better Control

Here are some useful options to enhance your S3 transfers:

- --exclude and --include: Filter files based on patterns

- --delete: Remove files in the destination that don't exist in the source

- --storage-class: Specify the storage class for the copied objects

- --acl: Set access control for the copied objects

Example of copying only PDF files:

aws s3 cp s3://source-bucket/ s3://destination-bucket/ --recursive --exclude "*" --include "*.pdf"
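The order of the filters in that command matters: everything is included by default, and a later filter overrides an earlier one. The sketch below mimics that rule in Python to make the behavior easier to reason about. The helper name `is_selected` and the sample keys are made up for illustration, and the matching uses Python's `fnmatch`, which is an approximation of the CLI's behavior rather than its actual implementation.

```python
from fnmatch import fnmatch


def is_selected(key, filters):
    """Return True if `key` would be transferred under the given filters.

    `filters` is an ordered list of ("exclude" | "include", pattern) pairs,
    in the same order the options appear on the command line.
    """
    selected = True  # every key is included until a filter says otherwise
    for kind, pattern in filters:
        if fnmatch(key, pattern):
            # A later matching filter overrides any earlier decision
            selected = (kind == "include")
    return selected


pdf_only = [("exclude", "*"), ("include", "*.pdf")]
print(is_selected("reports/2024/summary.pdf", pdf_only))  # True
print(is_selected("reports/2024/raw.csv", pdf_only))      # False
```

Swapping the two filters would exclude everything, because the exclude pattern would then be applied last.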

Method 2: Using S3 Batch Operations

S3 Batch Operations is a built-in feature that allows you to perform operations on large numbers of S3 objects with a single request. This is particularly useful for large-scale transfers.

Setting Up a Batch Operation Job

Here's how to set up a batch operation for S3 to S3 transfer:

- Create a manifest file listing all objects you want to copy

- Create an IAM role with appropriate permissions

- Configure and submit the batch job through the AWS Management Console or AWS CLI

Step-by-Step Guide for Batch Operations

Let's break down the process:

- Generate an inventory report of your source bucket or create a CSV manifest file

- Go to the S3 console and select “Batch Operations” from the left sidebar

- Click "Create job" and select your manifest file

- Choose "Copy" as the operation type

- Specify your destination bucket

- Configure job options and permissions

- Review and create the job

The advantage of batch operations is that AWS handles the execution, retry logic, and tracking for you.
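If you build the CSV manifest yourself instead of using an inventory report, a short script can generate it. This is a minimal sketch that assumes the two-column form (bucket, then object key) with URL-encoded keys. The function name `build_manifest_csv` and the sample keys are hypothetical, and you would still need to upload the resulting file to S3 before creating the job.

```python
import csv
import io
from urllib.parse import quote


def build_manifest_csv(bucket, keys):
    """Return CSV text with one `bucket,key` row per object."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for key in keys:
        # URL-encode the key so spaces and commas cannot break the row
        writer.writerow([bucket, quote(key, safe="/")])
    return buffer.getvalue()


manifest = build_manifest_csv(
    "my-source-bucket",
    ["photos/2024/img 001.jpg", "docs/report.pdf"],
)
print(manifest)
```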

Method 3: Cross-Region Replication (CRR) or Same-Region Replication (SRR)

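S3 replication allows you to automatically copy objects from one bucket to another, either in the same region (SRR) or across different regions (CRR). Versioning must be enabled on both the source and destination buckets before you add a replication rule.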

Setting Up Replication Rules

- Go to the source bucket in the S3 console

- Click on "Management" tab and then "Replication"

- Click "Add rule"

- Configure the rule scope (entire bucket or with prefix/tags)

- Select the destination bucket

- Set up IAM role (you can let AWS create one for you)

- Configure additional options if needed

- Save the rule
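The same rule can also be created from code. The sketch below builds a replication configuration and passes it to the `put_bucket_replication` call of a Boto3 S3 client. It assumes that `s3_client` was created with `boto3.client("s3")`, that versioning is already enabled on both buckets, and that the IAM role exists. The rule ID, the role ARN in the usage comment, and both function names are placeholders.

```python
def build_replication_config(role_arn, destination_bucket, prefix=""):
    """Build a single-rule replication configuration for new objects."""
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": "copy-new-objects",      # hypothetical rule name
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},  # empty prefix = whole bucket
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
            }
        ],
    }


def apply_replication(s3_client, source_bucket, config):
    """Attach the configuration to the source bucket."""
    s3_client.put_bucket_replication(
        Bucket=source_bucket,
        ReplicationConfiguration=config,
    )


# Example usage (assumes boto3 is installed and credentials are configured):
# import boto3
# config = build_replication_config(
#     "arn:aws:iam::123456789012:role/example-replication-role",
#     "my-destination-bucket",
# )
# apply_replication(boto3.client("s3"), "my-source-bucket", config)
```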

Limitations and Considerations

While replication is powerful, be aware of these limitations:

- Replication only applies to new objects after the rule is set up

- Existing objects won't be replicated automatically

- Delete markers can be replicated, but deletions with version IDs are not

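- Objects encrypted with customer-provided keys (SSE-C) were long excluded from replication; AWS has since added support, so confirm the current behavior in the replication documentation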

Replicating Existing Objects

To replicate existing objects, you'll need to use S3 Batch Replication:

- Go to the "Replication" section of your source bucket

- Select the "Batch operations" tab

- Create a new batch replication job

- Follow the wizard to set up the job for existing objects

Method 4: Using the AWS Management Console

If you prefer a visual interface or need to transfer just a few files, the AWS Management Console is your simplest option.

Manual Copy and Paste Method

- Sign in to the AWS Management Console and open the S3 service

- Navigate to your source bucket and select the objects you want to copy

- Click the "Actions" button and select "Copy"

- Navigate to your destination bucket

- Click "Actions" and select "Paste"

Bulk Upload/Download Limitations

The console method works well for small transfers but has limitations:

- You can only select up to 1,000 objects at once

- Large file transfers might time out

- No automatic retry mechanism for failed transfers

- Less efficient for transferring many small files

Using Folder Actions

For transferring entire folders:

- Navigate to the folder in your source bucket

- Select the folder

- Click "Actions" and select "Copy"

- Navigate to your destination bucket

- Click "Actions" and select "Paste"

Method 5: Using AWS SDK with Custom Scripts

For more control and automation, you can write custom scripts using AWS SDKs. This is perfect for recurring transfers or complex migration scenarios.

Python Script Example with Boto3

Here's a simple Python script using the Boto3 library to copy objects between buckets:

    import boto3


    def copy_between_buckets(source_bucket, destination_bucket, prefix=""):
        """Copy every object under `prefix` from one bucket to another."""
        s3 = boto3.client('s3')

        # List objects in the source bucket, one page (up to 1,000 keys) at a time
        paginator = s3.get_paginator('list_objects_v2')
        pages = paginator.paginate(Bucket=source_bucket, Prefix=prefix)

        for page in pages:
            # Pages for an empty bucket or prefix have no "Contents" key
            if "Contents" in page:
                for obj in page["Contents"]:
                    source_key = obj["Key"]
                    print(f"Copying {source_key}")

                    # Server-side copy; copy_object handles objects up to 5 GB
                    copy_source = {'Bucket': source_bucket, 'Key': source_key}
                    s3.copy_object(
                        CopySource=copy_source,
                        Bucket=destination_bucket,
                        Key=source_key
                    )

        print("Copy completed!")


    # Example usage
    copy_between_buckets('my-source-bucket', 'my-destination-bucket')

Node.js Example

If you prefer JavaScript, here's a Node.js example using the AWS SDK:

    const AWS = require('aws-sdk');

    const s3 = new AWS.S3();

    async function copyBucketObjects(sourceBucket, destBucket, prefix = '', continuationToken) {
      try {
        // List one page (up to 1,000 objects) from the source bucket
        const listParams = {
          Bucket: sourceBucket,
          Prefix: prefix
        };
        if (continuationToken) {
          // Resume the listing where the previous page ended
          listParams.ContinuationToken = continuationToken;
        }

        const listedObjects = await s3.listObjectsV2(listParams).promise();
        const contents = listedObjects.Contents || [];

        if (contents.length === 0) {
          console.log('No objects found in source bucket');
          return;
        }

        // Copy each object on this page to the destination bucket
        const copyPromises = contents.map(object => {
          const copyParams = {
            // Keys with special characters must be URL-encoded here
            CopySource: `${sourceBucket}/${object.Key}`,
            Bucket: destBucket,
            Key: object.Key
          };
          console.log(`Copying: ${object.Key}`);
          return s3.copyObject(copyParams).promise();
        });

        await Promise.all(copyPromises);

        // If the listing was truncated, continue with the next page
        if (listedObjects.IsTruncated) {
          await copyBucketObjects(
            sourceBucket,
            destBucket,
            prefix,
            listedObjects.NextContinuationToken
          );
        } else {
          console.log('Copy completed successfully!');
        }
      } catch (err) {
        console.error('Error copying objects:', err);
      }
    }

    // Example usage
    copyBucketObjects('my-source-bucket', 'my-destination-bucket');

Custom Script Benefits

Using custom scripts offers several advantages:

- Complete control over the transfer process

- Ability to add custom logic (like transformations)

- Better error handling and logging

- Can be scheduled or triggered by events

- Works with any size of data transfer

Comparing the 5 Methods: Which One Should You Choose?

| Method | Best For | Ease of Use | Scalability | Automation |

|---|---|---|---|---|

| AWS CLI | Medium-sized transfers, command-line users | Medium | High | High |

| S3 Batch Operations | Large-scale transfers, millions of objects | Medium | Very High | High |

| S3 Replication | Ongoing synchronization, disaster recovery | Easy | High | Very High |

| AWS Console | Small transfers, occasional use | Very Easy | Low | None |

| Custom Scripts | Complex transfers, special requirements | Hard | High | Very High |

Decision Factors to Consider

- Data volume: For large volumes, prefer CLI, Batch Operations, or custom scripts

- Frequency: For recurring transfers, use Replication or automated scripts

- Technical skill: Console for beginners, CLI for intermediate, SDK for advanced users

- Speed requirements: Batch Operations and CLI are typically faster for large transfers

- Special needs: Custom scripts if you need transformations or complex logic

Best Practices for S3 to S3 Transfers

Minimizing Transfer Costs

Even though the methods we've discussed are free in terms of service costs, data transfer can still incur charges. Here's how to minimize them:

- Transfer within the same region to avoid cross-region data transfer fees

- Skip S3 Transfer Acceleration for bucket-to-bucket copies: it adds cost and is designed for uploads from distant clients, not server-side copies

- Batch small files together before transferring to reduce request costs

- Consider compressing data before transferring to reduce the total bytes transferred

Ensuring Data Integrity

To ensure your data arrives intact:

- Use the --dryrun option with AWS CLI to test commands before executing

- Verify object counts and sizes after transfer

- Enable versioning on buckets to prevent accidental overwrites

- Use checksums to verify file integrity
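The count-and-size check from this list is easy to script. Here is a minimal sketch that lists both buckets and reports keys that are missing from the destination or differ in size. It assumes `s3_client` is a Boto3 S3 client and that objects keep the same key in both buckets. The function names are illustrative, and a size match alone does not prove the contents are identical.

```python
def list_object_sizes(s3_client, bucket, prefix=""):
    """Return {key: size_in_bytes} for every object under `prefix`."""
    paginator = s3_client.get_paginator("list_objects_v2")
    sizes = {}
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # Empty pages carry no "Contents" key
        for obj in page.get("Contents", []):
            sizes[obj["Key"]] = obj["Size"]
    return sizes


def compare_buckets(s3_client, source_bucket, destination_bucket, prefix=""):
    """Return (missing_keys, size_mismatch_keys), both sorted."""
    source = list_object_sizes(s3_client, source_bucket, prefix)
    destination = list_object_sizes(s3_client, destination_bucket, prefix)
    missing = sorted(key for key in source if key not in destination)
    mismatched = sorted(
        key for key in source
        if key in destination and source[key] != destination[key]
    )
    return missing, mismatched


# Example usage (assumes boto3 is installed and credentials are configured):
# import boto3
# missing, mismatched = compare_buckets(
#     boto3.client("s3"), "my-source-bucket", "my-destination-bucket"
# )
# print(f"{len(missing)} missing, {len(mismatched)} with different sizes")
```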

Performance Optimization

To speed up your transfers:

- Use multipart uploads for large files

- Run multiple transfer operations in parallel

- Don't count on S3 Transfer Acceleration for cross-region copies; it accelerates client uploads and downloads, not server-side copies between buckets

- For CLI transfers, adjust max_concurrent_requests and multipart_threshold in your AWS config
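In a custom script, running copies in parallel usually means a thread pool. The sketch below issues `copy_object` calls from several worker threads and collects failures instead of stopping at the first one. It assumes `s3_client` is a Boto3 S3 client (Boto3 clients can be shared between threads) and that you already have the list of keys. The function name and the default of 10 workers are arbitrary choices for illustration.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def copy_keys_in_parallel(s3_client, source_bucket, destination_bucket,
                          keys, max_workers=10):
    """Copy `keys` concurrently; return (copied_keys, failed) lists."""

    def copy_one(key):
        s3_client.copy_object(
            CopySource={"Bucket": source_bucket, "Key": key},
            Bucket=destination_bucket,
            Key=key,
        )

    copied, failed = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Map each future back to its key so failures can be reported
        futures = {pool.submit(copy_one, key): key for key in keys}
        for future in as_completed(futures):
            key = futures[future]
            try:
                future.result()
                copied.append(key)
            except Exception as exc:  # keep going and report at the end
                failed.append((key, exc))
    return copied, failed
```

Results arrive in completion order, so sort `copied` if you need a stable report.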

Troubleshooting Common S3 Transfer Issues

Permission Errors

If you encounter "Access Denied" errors:

- Check bucket policies on both source and destination buckets

- Verify IAM permissions for the user or role performing the transfer

- Ensure you have both read permissions on the source and write permissions on the destination

- Check for any bucket ACLs that might be restricting access
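To make the read-on-source and write-on-destination requirement concrete, here is a sketch of a minimal IAM policy document built as a Python dictionary. The function name and statement IDs are made up. Real setups often need more, for example KMS permissions for encrypted objects or extra actions when copying tags and ACLs, so treat this as a starting point.

```python
import json


def build_copy_policy(source_bucket, destination_bucket):
    """Return an IAM policy allowing a copy from source to destination."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBothBuckets",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{source_bucket}",
                    f"arn:aws:s3:::{destination_bucket}",
                ],
            },
            {
                "Sid": "ReadSourceObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{source_bucket}/*",
            },
            {
                "Sid": "WriteDestinationObjects",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{destination_bucket}/*",
            },
        ],
    }


print(json.dumps(build_copy_policy("my-source-bucket", "my-destination-bucket"), indent=2))
```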

Transfer Timeouts

For timeout issues:

- Break large transfers into smaller batches

- Use the --no-verify-ssl option with AWS CLI only to diagnose SSL verification issues, since it disables certificate checks

- Increase timeout settings in your scripts or tools

- Consider using S3 Batch Operations for very large transfers

Handling Incomplete Transfers

To deal with incomplete transfers:

- For CLI sync, run the command again; it skips objects that were already copied and picks up where it left off (the aws s3 commands have no separate resume flag)

- Implement retry logic in custom scripts

- Use S3 Batch Operations which automatically handles retries
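Retry logic in a custom script can be as small as a wrapper that retries a failing call with exponential backoff. The sketch below is generic and not tied to any AWS API: `operation` is any zero-argument callable, and the name `with_retries` and its defaults are illustrative. Boto3 also has built-in retry settings, so a wrapper like this mainly helps around larger units of work.

```python
import time


def with_retries(operation, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call `operation()` until it succeeds or attempts run out.

    Waits base_delay, then 2x, 4x, ... between attempts and re-raises
    the last error once `max_attempts` is reached.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


# Example usage with a Boto3 client named s3 (an assumption):
# with_retries(lambda: s3.copy_object(
#     CopySource={"Bucket": "my-source-bucket", "Key": "file.txt"},
#     Bucket="my-destination-bucket",
#     Key="file.txt",
# ))
```

The `sleep` parameter exists so the wait can be replaced in tests.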

Conclusion

Transferring data between S3 buckets doesn't have to be complicated or expensive. The five methods we've covered—AWS CLI, S3 Batch Operations, S3 Replication, AWS Management Console, and custom scripts—give you plenty of options to handle any transfer scenario without incurring additional service costs.

For small, one-time transfers, the AWS Console is perfect. For regular or larger transfers, AWS CLI or S3 Batch Operations provide better scalability. If you need ongoing synchronization, S3 Replication is your best bet. And for complex scenarios requiring special handling, custom scripts give you complete control.

By following the best practices and troubleshooting tips outlined in this guide, you'll be able to move your data efficiently while minimizing costs and avoiding common pitfalls.

Frequently Asked Questions

1. Will I be charged for transferring data between S3 buckets?

If you're transferring between buckets in the same AWS region, there's no data transfer charge. However, cross-region transfers will incur standard AWS data transfer fees. Request costs (PUT, COPY, etc.) still apply regardless of region.

2. How can I transfer encrypted objects between S3 buckets?

For objects encrypted with SSE-S3 or SSE-KMS, all the methods described will work. The objects remain encrypted during transfer. For SSE-KMS, ensure the destination has the right KMS key permissions. Objects with customer-provided keys (SSE-C) must be decrypted and re-encrypted during transfer using custom scripts.

3. What's the fastest way to transfer a large amount of data between S3 buckets?

For very large transfers, S3 Batch Operations typically offers the best performance as it's optimized for scale and runs directly within the AWS infrastructure. For cross-region transfers, S3 Transfer Acceleration does not help with server-side copies; running several copy operations in parallel is the more reliable way to improve speed.

4. Can I schedule regular transfers between S3 buckets?

Yes, you can schedule regular transfers using AWS Lambda functions triggered by EventBridge (formerly CloudWatch Events) on a schedule. Alternatively, S3 Replication provides continuous, automatic copying of new objects as they're added to the source bucket.
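A scheduled Lambda function for this could look like the sketch below. It assumes the EventBridge rule passes a constant JSON input with `source_bucket`, `destination_bucket`, and an optional `prefix`. Those field names are invented for this example. The extra `s3_client` parameter is only there to make local testing possible; Lambda itself calls the handler with `event` and `context`.

```python
def copy_prefix(s3_client, source_bucket, destination_bucket, prefix=""):
    """Copy every object under `prefix`; return the number of objects copied."""
    copied = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=source_bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            s3_client.copy_object(
                CopySource={"Bucket": source_bucket, "Key": obj["Key"]},
                Bucket=destination_bucket,
                Key=obj["Key"],
            )
            copied += 1
    return copied


def lambda_handler(event, context, s3_client=None):
    """Entry point invoked by the scheduled rule."""
    if s3_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        s3_client = boto3.client("s3")
    copied = copy_prefix(
        s3_client,
        event["source_bucket"],       # hypothetical event fields
        event["destination_bucket"],
        event.get("prefix", ""),
    )
    return {"copied": copied}
```

This copies everything on each run, so for large buckets you would likely add a check that skips objects already present in the destination.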

5. How do I verify that all my files were transferred correctly?

You can verify transfers by comparing object counts and total storage size between buckets. For more detailed verification, use the AWS CLI to list objects in both buckets and compare the results, or write a script that checks each object's ETag. The ETag is an MD5 checksum only for objects uploaded in a single part without SSE-KMS or SSE-C, and multipart ETags can differ between the source and the copy. S3 Batch Operations also provides detailed completion reports.